Okay, so check this out: portfolio tracking in DeFi used to feel like putting together a jigsaw puzzle in the dark. You had tokens scattered across chains, LP positions hiding in farm contracts, borrowed positions whose collateral ratios shifted with every price move, and price oracles that sometimes lied (ugh). My instinct said there had to be a better layer between me and the chaos. Initially I thought a single dashboard would solve everything, but then I realized that tracking is not just aggregation. It’s context, simulation, and upfront risk control tied to how your wallet talks to dApps.

Here’s the thing. A good wallet does three things at once: it represents ownership, it mediates intent, and it simulates consequences before you hit confirm. Really? Yes. In the near term, if you can simulate a transaction against current chain state before signing, you avoid dumb losses from slippage, failed calls, or malicious calldata. Over the long term, coupling that simulation with a readable portfolio view (positions, P&L, exposure by chain, and open allowances) is what turns a wallet into a portfolio operating center rather than just a key manager.

I want to be practical, so I’ll walk through the real problems I kept running into, the technical ways to solve them, and the workflows I use now, warts and all. Something always felt off about dashboards that pull token balances but ignore allowances and pending transactions, and that omission bites. I’m biased, but wallet-level simulation and permission controls are the single biggest step forward for active DeFi users.

Problem first: fragmentation. The short answer is that assets live everywhere. The fuller answer is that bridging, LPs, staking contracts, vault strategies, and derivative positions create a topology that is both multi-contract and multi-chain. When your “portfolio” is really a graph of relationships (a token held by address A, staked in contract B, used as collateral for a loan in protocol C), you need an indexer plus semantic decoding to map positions into something human-manageable, and that takes more than a simple RPC balance lookup.

So how do you build a reliable portfolio picture? Start with a hybrid data model. Use on-chain reads for canonical facts (balances, allowances, token contracts). Add subgraphs or indexed event scraping for historical positions and derivatives. Then layer in price oracles or market-data feeds to translate on-chain units into dollars. Finally, reconcile with pending mempool transactions so you don’t misreport a balance that is about to change. This mix gives you a near-real-time view of your positions with fewer surprises.
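The hybrid model above boils down to a small reconciliation step. Here is a minimal Python sketch of it, assuming the on-chain reads, indexed positions, price feed, and pending-transaction deltas have already been fetched into plain dictionaries; the class and field names are hypothetical, not any particular wallet’s data model.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Position:
    token: str       # token symbol (hypothetical identifier scheme)
    location: str    # where it sits: "wallet", or a vault or staking contract label
    amount: Decimal  # amount in whole-token units


@dataclass
class PortfolioSnapshot:
    positions: list[Position]         # from on-chain reads and indexed events
    prices_usd: dict[str, Decimal]    # token -> USD price from an oracle or market-data feed
    pending_deltas: dict[str, Decimal] = field(default_factory=dict)  # token -> net change from pending txs

    def net_amounts(self) -> dict[str, Decimal]:
        """Sum every location per token, then apply pending-transaction deltas."""
        totals: dict[str, Decimal] = {}
        for p in self.positions:
            totals[p.token] = totals.get(p.token, Decimal(0)) + p.amount
        for token, delta in self.pending_deltas.items():
            totals[token] = totals.get(token, Decimal(0)) + delta
        return totals

    def value_usd(self) -> tuple[Decimal, list[str]]:
        """Return (total USD value, unpriced tokens) so missing prices are visible, not silently zero."""
        total = Decimal(0)
        unpriced: list[str] = []
        for token, amount in self.net_amounts().items():
            price = self.prices_usd.get(token)
            if price is None:
                unpriced.append(token)
            else:
                total += amount * price
        return total, unpriced
```

Keeping `location` on each position means the same data can be grouped by contract instead of by token when you need to see where a balance is actually locked.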

Build the right mental model before integrating dApps

Okay, let’s slow down. Seriously: stop treating every dApp connection like a casual tap-to-connect. Your wallet should act like a protocol-aware gatekeeper. When a dApp asks for an approval, you need to know not only the allowance size but the intent: which contract will spend, for how long, and under what conditions. That means the wallet needs to show decoded calldata, simulate the transaction against the target contract’s current state, and warn when patterns match known exploit vectors (infinite approvals, delegatecalls to unknown addresses, unusual fallback usage).
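Decoding an approval and flagging the two most obvious red flags (an unlimited amount and an unrecognized spender) takes only a few lines. This is a minimal Python sketch, assuming the calldata targets a standard ERC-20 `approve(address,uint256)` (selector `0x095ea7b3`) and that `known_spenders` is a hypothetical allowlist of lowercase addresses you maintain yourself; real wallets also decode `permit` signatures and other patterns this ignores.

```python
from typing import Optional

APPROVE_SELECTOR = "095ea7b3"  # first 4 bytes of keccak256("approve(address,uint256)")
MAX_UINT256 = 2**256 - 1       # the conventional "infinite approval" amount


def decode_approve(calldata: str) -> Optional[dict]:
    """Decode ERC-20 approve calldata; return None if it is not a well-formed approve call."""
    data = calldata.lower()
    if data.startswith("0x"):
        data = data[2:]
    # 4-byte selector (8 hex chars) followed by two 32-byte ABI words (64 hex chars each)
    if len(data) != 8 + 64 * 2 or not data.startswith(APPROVE_SELECTOR):
        return None
    spender_word, amount_word = data[8:72], data[72:136]
    if spender_word[:24] != "0" * 24:  # an address occupies only the low 20 bytes of its word
        return None
    amount = int(amount_word, 16)
    return {"spender": "0x" + spender_word[24:], "amount": amount, "unlimited": amount == MAX_UINT256}


def approval_warnings(calldata: str, known_spenders: set[str]) -> list[str]:
    """Human-readable warnings to show before the user signs an approval."""
    decoded = decode_approve(calldata)
    if decoded is None:
        return ["not a standard ERC-20 approve call; decode it before signing"]
    warnings = []
    if decoded["unlimited"]:
        warnings.append("unlimited (max uint256) approval")
    if decoded["spender"] not in known_spenders:  # allowlist is compared in lowercase
        warnings.append(f"unrecognized spender {decoded['spender']}")
    return warnings
```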

On one hand, a granular permission UI is product work; on the other hand, it’s security. Actually, let me rephrase that: product teams that bake permission hygiene into the UX cut down the after-the-fact revocation work users have to do, which means fewer social-engineering losses and less grief overall. My first run-in with a rogue aggregator taught me that permission fatigue is real; I had dozens of useless allowances that I ignored, until one morning I didn’t. Lesson learned: revoke proactively.

Integration tips for engineers and power users: 1) support read-only “watch” accounts so analytics aren’t tied to a hot key; 2) implement transaction simulation in the signing flow; 3) show exact calldata decoding and state diffs; 4) surface allowance lineage so users can see why a dApp needs the spending power. These features change behavior. People stop carelessly approving, and they start verifying.

A practical workflow: how I track and act

Step one: create a watch-only aggregate. Why? Because you want visibility without exposure. Add every address you control and the contracts you interact with: LP token addresses, staking contracts, and vaults. This makes it easy to tie a token balance to the place where it actually matters, and you’ll stop assuming your on-chain balance equals what you can use instantly.

Step two: keep allowances tidy. Every week, glance at active approvals and revoke the ones you don’t use. Not all approvals are dangerous, but many are unnecessary and increase your blast radius. Where possible, use per-dApp spending limits and ephemeral approvals, or delegate signing to session keys so your main key never authorizes repeated spending by unknown contracts.
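A per-dApp spending limit is easy to check mechanically during that weekly glance. Below is a minimal Python sketch, assuming you already have a list of active allowances and token prices; the USD cap per spender is a hypothetical personal policy, not a wallet feature. Tokens with no price are treated as unbounded exposure, so they get flagged rather than silently ignored.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Allowance:
    token: str
    spender: str
    amount: Decimal  # whole-token units; a huge value approximates "unlimited"


def allowances_over_cap(allowances: list[Allowance],
                        caps_usd: dict[str, Decimal],
                        prices_usd: dict[str, Decimal],
                        default_cap_usd: Decimal = Decimal(0)) -> list[tuple[Allowance, Decimal]]:
    """Return (allowance, exposure_usd) pairs above the spender's cap, largest exposure first."""
    flagged = []
    for a in allowances:
        if a.amount == 0:
            continue  # nothing left to revoke
        price = prices_usd.get(a.token)
        # Unknown price: assume the worst so the allowance is always surfaced.
        exposure = a.amount * price if price is not None else Decimal("Infinity")
        if exposure > caps_usd.get(a.spender, default_cap_usd):
            flagged.append((a, exposure))
    flagged.sort(key=lambda pair: pair[1], reverse=True)
    return flagged
```

With `default_cap_usd` at zero, any spender you haven’t explicitly budgeted for gets flagged. The exposure here is the allowance itself; what a spender could take today is also limited by your current balance of that token, but an unlimited approval keeps covering whatever you deposit later.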

Step three: simulate before signing. Use a wallet that can replay the call against a node or a forked state and produce a readable failure reason or success trace. Simulation exposes slippage, oracle-dependency failures, and gas drains before they cost you anything. When you simulate, you should see the gas profile, internal calls, ERC-20 transfers, and state changes, so you can verify that a swap will actually execute as intended, or spot when a multicall orders its steps in a way that exposes you to a sandwich attack.
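At its simplest, the replay step is a JSON-RPC `eth_call`, which executes a call against a chosen block’s state without broadcasting it, followed by decoding any revert data that comes back. This is a minimal Python sketch, assuming you send the request dict with your own HTTP client; where a node puts revert data in its error response varies by client, so that extraction is left out. Richer traces (internal calls, state diffs) need debug or tracing RPC methods that not every provider exposes.

```python
def eth_call_request(sender: str, to: str, data: str, value_wei: int = 0,
                     block: str = "latest", request_id: int = 1) -> dict:
    """Build a JSON-RPC eth_call payload that simulates a call without broadcasting it."""
    tx = {"from": sender, "to": to, "data": data, "value": hex(value_wei)}
    return {"jsonrpc": "2.0", "id": request_id, "method": "eth_call", "params": [tx, block]}


ERROR_SELECTOR = bytes.fromhex("08c379a0")  # Error(string): require/revert with a message
PANIC_SELECTOR = bytes.fromhex("4e487b71")  # Panic(uint256): failed assert, overflow, etc. (Solidity 0.8+)


def decode_revert_reason(revert_data: str) -> str:
    """Turn raw revert bytes into something a human can read before signing."""
    hex_data = revert_data[2:] if revert_data.startswith("0x") else revert_data
    data = bytes.fromhex(hex_data)
    if not data:
        return "reverted without a reason"
    if data[:4] == ERROR_SELECTOR:
        offset = int.from_bytes(data[4:36], "big")  # ABI offset of the string, relative to the args
        start = 4 + offset
        length = int.from_bytes(data[start:start + 32], "big")
        return data[start + 32:start + 32 + length].decode("utf-8", errors="replace")
    if data[:4] == PANIC_SELECTOR:
        return f"panic code 0x{int.from_bytes(data[4:36], 'big'):02x}"
    return f"custom error or unrecognized revert data: 0x{data.hex()}"
```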

Why simulation is a game-changer

Here’s the thing. Simulating a transaction is not just about avoiding errors. It’s about seeing intent and consequences in action. It can show you how a router aggregates liquidity, whether a permit is being abused, or whether a complex vault harvest will trigger a rebase you didn’t account for. By combining simulation with mempool inspection and an estimated post-transaction state, you can also decide whether to rebroadcast with a different gas strategy, bundle via a relay, or abort to avoid an MEV sandwich.
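Turning a simulation into a readable diff mostly means comparing your balances before and after, then checking the result against what you meant to do. Here is a minimal Python sketch, assuming the pre- and post-simulation balances have already been extracted from a trace into `token -> amount` dictionaries (that extraction depends on your simulator and is not shown); `max_spend` and `min_receive` are hypothetical names for the intent you declare.

```python
from decimal import Decimal


def balance_diff(pre: dict[str, Decimal], post: dict[str, Decimal]) -> dict[str, Decimal]:
    """Net change per token between pre- and post-simulation balances (unchanged tokens omitted)."""
    diff = {}
    for token in sorted(set(pre) | set(post)):
        delta = post.get(token, Decimal(0)) - pre.get(token, Decimal(0))
        if delta != 0:
            diff[token] = delta
    return diff


def check_intent(diff: dict[str, Decimal],
                 max_spend: dict[str, Decimal],
                 min_receive: dict[str, Decimal]) -> list[str]:
    """List every way the simulated outcome departs from what the user said they wanted."""
    problems = []
    for token, delta in diff.items():
        if delta < 0:
            allowed = max_spend.get(token)
            if allowed is None:
                problems.append(f"unexpected outflow of {-delta} {token}")
            elif -delta > allowed:
                problems.append(f"spends {-delta} {token}, more than the {allowed} you intended")
    for token, minimum in min_receive.items():
        received = diff.get(token, Decimal(0))
        if received < minimum:
            problems.append(f"receives {received} {token}, less than the {minimum} you expected")
    return problems
```

An “unexpected outflow” entry is the case that matters most: an extra token leaving your wallet is exactly the surprise a malicious permit or router would produce.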

If you value automation, simulation is what makes automation safe. For example, a keeper bot that rebalances LP positions should simulate before sending; otherwise it risks executing on stale state. Human traders gain the same advantage by simulating to confirm edge cases. This is why wallets that embed simulation and surface human-friendly diffs reduce cognitive load and let users act with confidence instead of superstition.
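A keeper’s simulate-then-send guard might look like the following. It is a minimal Python sketch, assuming hypothetical `simulate`, `latest_block`, and `send` callables that you would wire to your own node or simulation service; the thresholds are illustrative, not recommendations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimResult:
    success: bool
    amount_out: int        # simulated output in raw token units
    block_number: int      # block whose state the simulation ran against
    revert_reason: str = ""


def rebalance_if_safe(simulate: Callable[[], SimResult],
                      latest_block: Callable[[], int],
                      send: Callable[[], str],
                      min_amount_out: int,
                      max_block_lag: int = 1) -> str:
    """Send only if a fresh simulation succeeds and clears the minimum output; otherwise say why not."""
    result = simulate()
    if not result.success:
        return f"skipped: simulation reverted ({result.revert_reason})"
    if latest_block() - result.block_number > max_block_lag:
        return "skipped: simulation ran on stale state"
    if result.amount_out < min_amount_out:
        return f"skipped: simulated output {result.amount_out} below minimum {min_amount_out}"
    return send()  # e.g. the transaction hash
```

The guard narrows the window but cannot close it: state can still move between simulation and inclusion, so the transaction itself should also carry an on-chain minimum-output check (such as a router’s minimum-output parameter) and revert instead of executing at a bad price.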

Rabby wallet: a practical mention

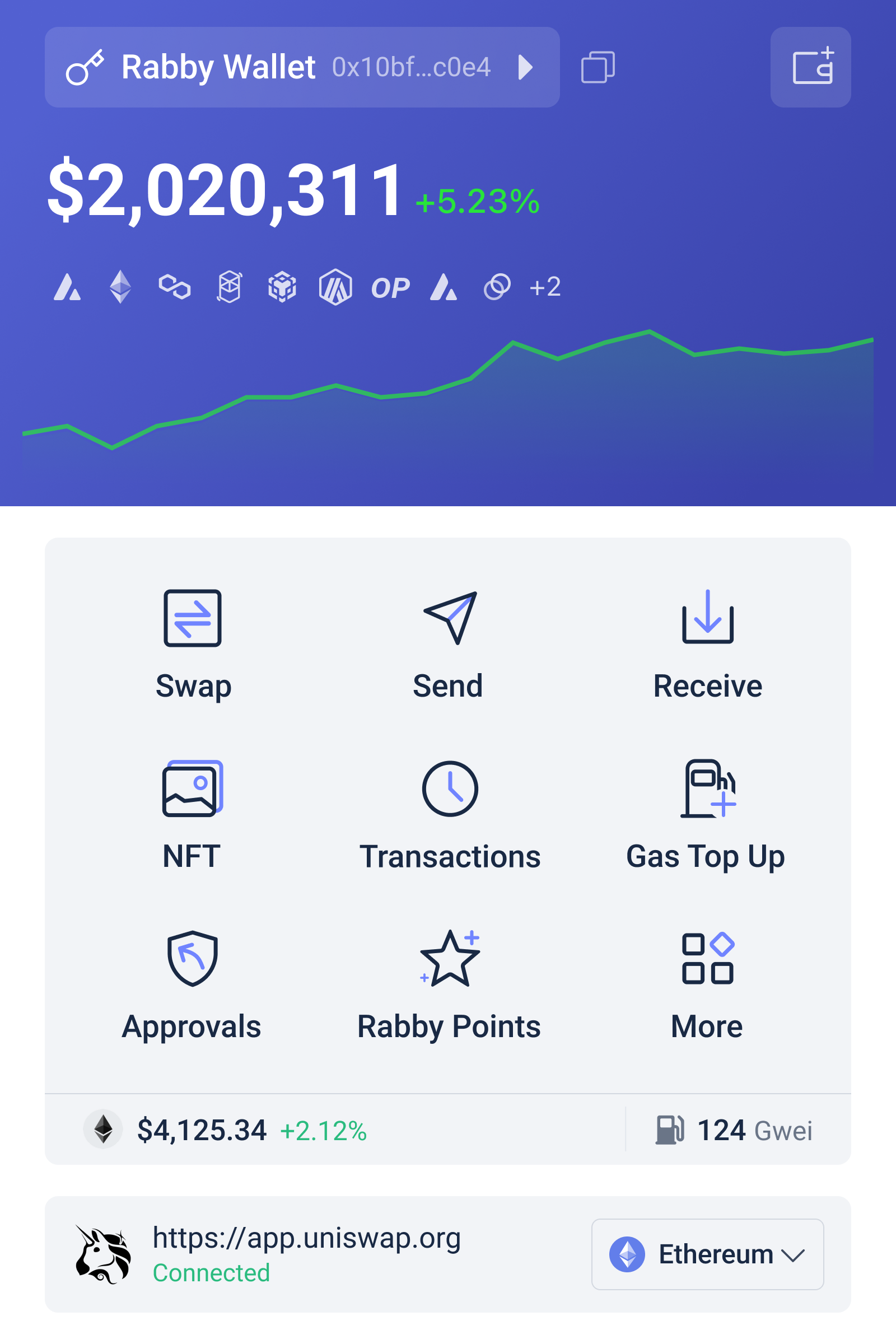

I bring this up because some wallets actually implement these features thoughtfully. Check out Rabby Wallet if you want a sense of how simulation, permission controls, and portfolio visibility can be integrated at the wallet layer rather than bolted on later. I’m not saying it’s perfect (nothing is), but treating the wallet as a smart mediator instead of a dumb key store aligns with how I prefer to operate.

Practical note: when you try a wallet like that, test with small amounts and review the decoded calldata. Seriously, small tests save tears. I’m not 100% sure any single tool will be your be-all and end-all, but the ones that combine portfolio tracking, simulation, and granular permissions speed up decision-making and reduce accidental losses.

Advanced topics for power users

Derivatives and lending positions need special handling. Net exposure isn’t just tokens; it includes collateralized borrowing, leverage, and short positions. Your tracker must compute net delta across synthetic and borrowed assets. That means fetching health ratios, liquidation thresholds, and oracle dependencies, then presenting a single risk metric you can act on through automated alerts or a rebalancing workflow.
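The single risk metric most lending protocols expose is some form of health factor: the liquidation-threshold-weighted value of your collateral divided by the value of your debt, with liquidation possible once it drops below 1. Here is a minimal Python sketch of that Aave-style formula plus a net-exposure tally, assuming prices and per-asset liquidation thresholds come from the protocol and oracle you already track; exact formulas differ between protocols, so check the one you use.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class Collateral:
    token: str
    amount: Decimal
    liquidation_threshold: Decimal  # e.g. Decimal("0.80"): 80% of the value counts


@dataclass
class Debt:
    token: str
    amount: Decimal


def health_factor(collateral: list[Collateral], debts: list[Debt], prices: dict[str, Decimal]) -> Decimal:
    """Threshold-weighted collateral value / debt value; below 1 means liquidatable."""
    weighted = sum((c.amount * prices[c.token] * c.liquidation_threshold for c in collateral), Decimal(0))
    debt_value = sum((d.amount * prices[d.token] for d in debts), Decimal(0))
    if debt_value == 0:
        return Decimal("Infinity")  # no debt, nothing to liquidate
    return weighted / debt_value


def net_exposure(wallet: dict[str, Decimal], collateral: list[Collateral], debts: list[Debt]) -> dict[str, Decimal]:
    """Token amounts you are actually long (+) or short (-) once loans are netted out."""
    net = dict(wallet)
    for c in collateral:
        net[c.token] = net.get(c.token, Decimal(0)) + c.amount
    for d in debts:
        net[d.token] = net.get(d.token, Decimal(0)) - d.amount
    return net
```

For example, 10 ETH at $3,000 with an 80% threshold against $16,000 of stablecoin debt gives a health factor of 1.5, so ETH would have to fall to $2,000 before liquidation. An alert rule can then be as simple as firing when the value drops below a buffer you choose.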

Cross-chain liquidity migrations are messy. Use simulated dry runs and timelocked bridging strategies. Bridge contracts can have sequencing constraints and timelocks that, if misunderstood, strand liquidity. Simulating against a forked source chain and a staging target chain helps detect race conditions and confirms that your post-bridge positions match expectations.
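Checking that a post-bridge position matches expectations can be scripted once the transfer is in flight. This is a minimal Python sketch, assuming a simplified bridge model where the target chain credits `amount_sent - fee` after a fixed delay; the `BridgeTransfer` fields are hypothetical, and real bridges differ (some require a separate claim transaction, some pay out a wrapped asset).

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class BridgeTransfer:
    amount_sent: Decimal
    fee: Decimal
    sent_at: int           # unix time the source-chain transaction confirmed
    timelock_seconds: int  # hypothetical fixed delay before the target chain credits funds


def reconcile_bridge(t: BridgeTransfer, target_before: Decimal, target_now: Decimal,
                     now: int, tolerance: Decimal = Decimal(0)) -> str:
    """Compare the target-chain balance change with what the bridge should have delivered."""
    if now < t.sent_at + t.timelock_seconds:
        return "pending: timelock not elapsed"
    expected = t.amount_sent - t.fee
    received = target_now - target_before
    if abs(received - expected) <= tolerance:
        return "ok"
    if received == 0:
        return "not received: check whether the bridge needs a claim transaction on the target chain"
    return f"mismatch: expected {expected}, received {received}"
```

Running the same check during a dry run on forks, before moving real funds, is a cheap way to learn how a given bridge actually behaves.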

Gas optimization matters. Batching, multicall composition, and relay bundling lower costs and reduce your front-running surface. Wallets that let you compose multicall transactions and simulate their step-by-step effects help you squeeze inefficiencies out of complex operations. Combine that with strategic nonce management and, when moving large positions, optional private-relay submission to avoid mempool visibility and MEV pressure.
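The saving from batching is easy to estimate. On Ethereum mainnet every transaction pays a fixed 21,000 gas intrinsic cost before any execution, so folding N calls into one multicall saves roughly (N − 1) × 21,000 gas, minus whatever overhead the multicall contract adds per call. A back-of-the-envelope Python sketch, where the per-call overhead is an assumption you would measure by simulation, and calldata costs and L2 data fees are ignored:

```python
BASE_TX_GAS = 21_000  # intrinsic gas every Ethereum transaction pays before execution


def batching_savings(call_gas: list[int], multicall_overhead_per_call: int = 0) -> int:
    """Gas saved by sending the calls as one multicall instead of separate transactions."""
    separate = sum(BASE_TX_GAS + g for g in call_gas)
    batched = BASE_TX_GAS + sum(call_gas) + multicall_overhead_per_call * len(call_gas)
    return separate - batched
```

Multiply by the gas price to get the saving in currency terms: for example, 36,000 gas at 20 gwei is 720,000 gwei, or 0.00072 ETH.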

Checklist: what to look for in a DeFi portfolio wallet

– Multi-chain aggregation with contract-level decoding.
– Transaction simulation and decoded diffs.
– Granular approval management and session keys.
– Watch-only accounts and alerts.
– The ability to replay transactions against a forked node for proof, including internal transfers and contract-level state changes, so you can reason about composite operations.

Also, small heuristics: prefer wallets that log historical transaction simulations, surface failed attempt reasons, and let you attach notes to positions for manual record-keeping. Sounds nerdy, but when you’re managing multiple strategies across protocols, those breadcrumbs are golden.

Common questions I get asked

Q: How often should I revoke approvals?

A: Weekly if you actively trade; monthly if you’re passive. If you use a lot of aggregators, check after each interaction. Prioritize large approvals and contracts you don’t recognize. Set up automated reminders, or use a wallet that flags approvals older than X days, so you don’t accumulate risky permissions.
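The “flag approvals older than X days” rule is straightforward to script if your wallet or an indexer gives you each approval’s grant time and last use. This is a minimal Python sketch, assuming those timestamps are available (if only the grant event is known, `last_used` stays `None`); the 30-day default and the `known_spenders` allowlist are hypothetical choices, and the ordering follows the advice above: unknown contracts first, then unlimited approvals, then the largest amounts.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_UINT256 = 2**256 - 1  # conventional "unlimited" approval amount


@dataclass
class Approval:
    token: str
    spender: str
    amount: int                           # raw token units
    granted_at: datetime
    last_used: Optional[datetime] = None  # None if unknown or never used


def stale_approvals(approvals: list[Approval], known_spenders: set[str],
                    now: Optional[datetime] = None, max_age_days: int = 30) -> list[Approval]:
    """Approvals idle longer than max_age_days, riskiest first."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    stale = [a for a in approvals if (a.last_used or a.granted_at) < cutoff]
    # Sort keys put False first: unknown spenders, then unlimited amounts, then larger amounts.
    return sorted(stale, key=lambda a: (a.spender in known_spenders, a.amount != MAX_UINT256, -a.amount))
```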

Q: Is simulation foolproof?

A: No. Simulation approximates the on-chain outcome based on the node’s current state; transactions that land ahead of yours, chain reorgs, and oracle updates can still change the result. It’s a strong mitigation, not absolute protection. For high-value operations, combine simulation with private submission or bundling to reduce MEV and front-running risk.
